Summary of Contents for NVIDIA MCX651105A-EDAT

- Page 1 NVIDIA ConnectX-6 InfiniBand/Ethernet Adapter Cards User Manual Exported on Nov/09/2023 12:30 PM https://docs.nvidia.com/networking/x/zYWmBQ...


Page 2: Table Of Contents

- Introduction (p. 11)
- Product Overview (p. 11)
- ConnectX-6 PCIe x8 Card (p. 12)
- ConnectX-6 PCIe x16 Card (p. 14)
- ConnectX-6 DE PCIe x16 Card (p. 15)
- ConnectX-6 for Liquid-Cooled Intel® Server System D50TNP Platforms (p. 16)
- ConnectX-6 Socket Direct™ Cards (p. 17)
- ConnectX-6 Dual-slot Socket Direct Cards (2x PCIe x16) ...

- Page 3
  - Ethernet Interfaces (p. 27)
  - PCI Express Interface (p. 27)
  - LED Interface (p. 28)
  - Heatsink Interface (p. 30)
  - SMBus Interface (p. 30)
  - Voltage Regulators (p. 30)
  - Hardware Installation (p. 31)
  - Safety Warnings (p. 31)
  - Installation Procedure Overview (p. 32)
  - System Requirements (p. 33)
  - Hardware Requirements (p. 33)
  - Airflow Requirements (p. 34)
  - Software Requirements (p. 35)
  - Safety Precautions (p. 35)
  - Pre-Installation Checklist (p. 36)
  - Bracket Replacement Instructions (p. 36)
  - ...

- Page 4
  - Cards for Intel Liquid-Cooled Platforms Installation Instructions (p. 59)
  - Installing the Card (p. 59)
  - Driver Installation (p. 65)
  - Linux Driver Installation (p. 65)
  - Prerequisites (p. 65)
  - Downloading NVIDIA OFED (p. 66)
  - Installing MLNX_OFED (p. 67)
  - Installation Script (p. 67)
  - Installation Procedure (p. 70)
  - Installation Results (p. 73)
  - Installation Logging ...

- Page 5
  - Firmware Upgrade (p. 100)
  - VMware Driver Installation (p. 100)
  - Software Requirements (p. 100)
  - Installing NATIVE ESXi Driver for VMware vSphere (p. 101)
  - Removing Earlier NVIDIA Drivers (p. 101)
  - Firmware Programming (p. 102)
  - Troubleshooting (p. 103)
  - General Troubleshooting (p. 103)
  - Linux Troubleshooting (p. 104)
  - Windows Troubleshooting ...

- Page 6
  - MCX651105A-EDAT Specifications (p. 109)
  - MCX653105A-HDAT Specifications (p. 111)
  - MCX653106A-HDAT Specifications (p. 113)
  - MCX653105A-HDAL Specifications (p. 115)
  - MCX653106A-HDAL Specifications (p. 117)
  - MCX653105A-ECAT Specifications (p. 119)
  - MCX653106A-ECAT Specifications (p. 121)
  - MCX654105A-HCAT Specifications (p. 123)
  - MCX654106A-HCAT Specifications (p. 125)
  - MCX654106A-ECAT Specifications (p. 128)
  - MCX653105A-EFAT Specifications (p. 130)
  - MCX653106A-EFAT Specifications ...

- Page 7 About This Manual: This User Manual describes NVIDIA® ConnectX®-6 InfiniBand/Ethernet adapter cards. It provides details on the board's interfaces and specifications, the software and firmware required to operate the board, and related documentation. Ordering Part Numbers: The table below provides the ordering part numbers (OPNs) for the available ConnectX-6 InfiniBand/Ethernet adapter cards.

- Page 8 This manual is intended for the installers and users of these cards. It assumes basic familiarity with InfiniBand and Ethernet networks and architecture specifications. Technical Support: Customers who purchased NVIDIA products directly from NVIDIA are invited to contact us through the following methods:...

- Page 9 (100Gb/s), HDR (200Gb/s) and NDR (400Gb/s) cables, including Direct Attach Copper cables (DACs), copper splitter cables, Active Optical Cables (AOCs) and transceivers in a wide range of lengths from 0.5m to 10km. In addition to meeting IBTA standards, NVIDIA tests every product in an end-to-end environment ensuring a Bit Error Rate of less than 1E-15. Read more at LinkX Cables and...

- Page 10 A list of the changes made to this document is provided in Document Revision History.


Page 11: Introduction

(HPC), storage, and datacenter applications. ConnectX-6 is a groundbreaking addition to the NVIDIA ConnectX series of industry-leading adapter cards. Building on the innovative features of previous ConnectX generations, ConnectX-6 offers a number of enhancements that further improve the performance and scalability of datacenter applications. Specific PCIe stand-up cards are also available with a cold plate for insertion into liquid-cooled Intel®...

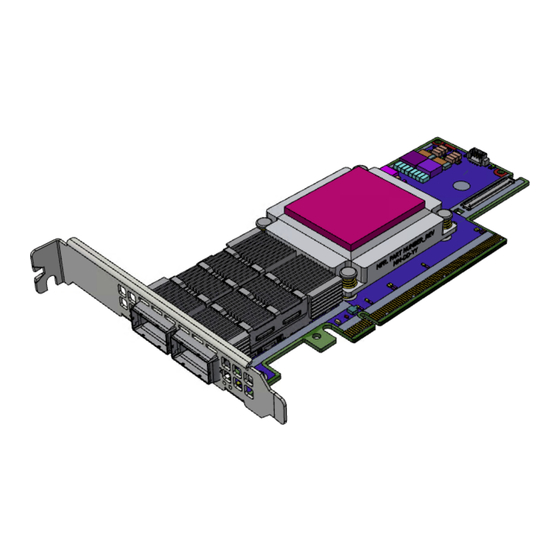

Page 12: ConnectX-6 PCIe x8 Card

| Configuration | OPN | Marketing Description |
|---|---|---|
| ... | MCX653106A-ECAT | ConnectX-6 InfiniBand/Ethernet adapter card, 100Gb/s (HDR100, EDR IB and 100GbE), dual-port QSFP56, PCIe 3.0/4.0 x16, tall bracket |
| ConnectX-6 DE PCIe x16 Card | MCX683105AN-HDAT | ConnectX-6 DE InfiniBand adapter card, HDR, single-port QSFP, PCIe 3.0/4.0 x16, No Crypto, Tall Bracket |
| ConnectX-6 PCIe x16 Cards for liquid-cooled Intel®... | | |

- Page 13

| Part Number | MCX651105A-EDAT |
|---|---|
| Form Factor/Dimensions | PCIe Half Height, Half Length / 167.65mm x 68.90mm |
| Data Transmission Rate | Ethernet: 10/25/40/50/100 Gb/s; InfiniBand: SDR, DDR, QDR, FDR, EDR, HDR100 |
| Network Connector Type | Single-port QSFP56 |
| PCIe | x8 through Edge Connector; PCIe Gen 3.0 / 4.0 SERDES @ 8.0GT/s / 16.0GT/s... |


Page 14: ConnectX-6 PCIe x16 Card

ConnectX-6 with a single PCIe x16 slot can support a bandwidth of up to 100Gb/s in a PCIe Gen 3.0 slot, or up to 200Gb/s in a PCIe Gen 4.0 slot. This form factor is also available for Intel® Server System D50TNP platforms, where an Intel liquid-cooled cold plate serves as the adapter cooling mechanism. Part Number: MCX653105A-ECAT, MCX653106A-ECAT...
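The 100Gb/s versus 200Gb/s limits follow from PCIe link arithmetic. Below is a minimal Python sketch of that arithmetic. It assumes the standard per-lane rates of 8.0 GT/s for Gen 3.0 and 16.0 GT/s for Gen 4.0, both with 128b/130b encoding. It also ignores transaction-layer protocol overhead, which lowers usable throughput further. The function names are hypothetical.

```python
# Minimal sketch: raw (pre-protocol-overhead) PCIe bandwidth per direction.
# Assumption: 8.0 GT/s per lane for Gen 3.0 and 16.0 GT/s for Gen 4.0,
# both using 128b/130b line encoding.
PCIE_LANE_RATE_GT_S = {3: 8.0, 4: 16.0}
ENCODING_EFFICIENCY = 128 / 130


def raw_pcie_gbps(gen: int, lanes: int) -> float:
    """Approximate bit rate in Gb/s before TLP/DLLP protocol overhead."""
    return PCIE_LANE_RATE_GT_S[gen] * ENCODING_EFFICIENCY * lanes


def link_can_feed_port(port_gbps: float, gen: int, lanes: int) -> bool:
    """True if the raw PCIe rate is at least the network port rate."""
    return raw_pcie_gbps(gen, lanes) >= port_gbps


if __name__ == "__main__":
    for gen in (3, 4):
        rate = raw_pcie_gbps(gen, 16)
        print(f"Gen {gen}.0 x16: ~{rate:.0f} Gb/s, "
              f"200Gb/s port fits: {link_can_feed_port(200, gen, 16)}")
```

A Gen 3.0 x16 link comes to roughly 126 Gb/s and a Gen 4.0 x16 link to roughly 252 Gb/s. This is consistent with the 100Gb/s and 200Gb/s figures above. A raw rate above the port rate is a necessary condition, not a guarantee of line-rate throughput.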

Page 15: ConnectX-6 DE PCIe x16 Card

| Part Number | MCX653105A-ECAT, MCX653106A-ECAT, MCX653105A-HDAT, MCX653106A-HDAT |
|---|---|
| PCIe | x16 through Edge Connector; PCIe Gen 3.0 / 4.0 SERDES @ 8.0GT/s / 16.0GT/s |
| RoHS | RoHS Compliant |
| Adapter IC Part Number | MT28908A0-XCCF-HVM |

ConnectX-6 DE PCIe x16 Card: ConnectX-6 DE (ConnectX-6 Dx enhanced for HPC applications) with a single PCIe x16 slot can support a bandwidth of up to 100Gb/s in a PCIe Gen 3.0 slot, or up to 200Gb/s in a PCIe Gen 4.0 slot.

Page 16: ConnectX-6 for Liquid-Cooled Intel® Server System D50TNP Platforms

The cards below are available with a cold plate for insertion into liquid-cooled Intel® Server System D50TNP platforms.

| Part Number | MCX653105A-HDAL, MCX653106A-HDAL |
|---|---|
| Form Factor/Dimensions | PCIe Half Height, Half Length / 167.65mm x 68.90mm |
| Data Transmission Rate | Ethernet: 10/25/40/50/100/200 Gb/s; InfiniBand: SDR, DDR, QDR, FDR, EDR, HDR100, HDR... |

Page 17: ConnectX-6 Socket Direct™ Cards

ConnectX-6 Dual-slot Socket Direct Cards (2x PCIe x16): To achieve 200Gb/s, NVIDIA offers ConnectX-6 Socket Direct cards, which enable 200Gb/s connectivity even for servers limited to PCIe Gen 3.0. The adapter's 32-lane PCIe bus is split into two 16-lane buses. One is accessible through a PCIe x16 edge connector and the other through an x16 Auxiliary PCIe Connection card.

- Page 18

| Part Number | MCX654105A-HCAT, MCX654106A-HCAT, MCX654106A-ECAT |
|---|---|
| Form Factor/Dimensions | Adapter Card: PCIe Half Height, Half Length / 167.65mm x 68.90mm; Auxiliary PCIe Connection Card: 5.09 in. x 2.32 in. (129.30mm x 59.00mm); two 35cm Cabline CA-II Plus harnesses |
| Data Transmission Rate | MCX654105A-HCAT, MCX654106A-HCAT: Ethernet: 10/25/40/50/100/200 Gb/s; InfiniBand: SDR, DDR, QDR, FDR, EDR, HDR100, HDR. MCX654106A-ECAT: Ethernet: 10/25/40/50/100 Gb/s; InfiniBand: SDR, DDR, QDR, FDR, EDR, HDR100... |
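Here is a rough worked check of why two Gen 3.0 buses are needed. It assumes 8.0 GT/s per Gen 3.0 lane with 128b/130b encoding and ignores packet-level protocol overhead. On that basis, one Gen 3.0 x16 bus falls short of a 200Gb/s port, while the two buses together exceed it:

$$
\begin{aligned}
B_{\text{Gen3 x16}} &= 16 \times 8\ \text{GT/s} \times \tfrac{128}{130} \approx 126\ \text{Gb/s} < 200\ \text{Gb/s} \\
B_{\text{Socket Direct}} &= 2 \times B_{\text{Gen3 x16}} \approx 252\ \text{Gb/s} \geq 200\ \text{Gb/s}
\end{aligned}
$$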


Page 19: ConnectX-6 Single-slot Socket Direct Cards (2x PCIe x8 in a Row)

| Part Number | MCX654105A-HCAT, MCX654106A-HCAT, MCX654106A-ECAT |
|---|---|
| RoHS | RoHS Compliant |
| Adapter IC Part Number | MT28908A0-XCCF-HVM |

ConnectX-6 Single-slot Socket Direct Cards (2x PCIe x8 in a row): The PCIe x16 interface comprises two PCIe x8 links in a row, so each x8 link can be connected to a dedicated CPU in a dual-socket server. In this configuration, Socket Direct lowers latency and CPU utilization. Because each CPU connects directly to the network, traffic does not have to cross the QPI (UPI) link and the other CPU, which optimizes performance.

Page 20: Package Contents

| PCIe | x16 through Edge Connector; PCIe Gen 3.0 / 4.0 SERDES @ 8.0GT/s / 16.0GT/s; Socket Direct 2x8 in a row |
|---|---|
| RoHS | RoHS Compliant |
| Adapter IC Part Number | MT28908A0-XCCF-HVM |

Package Contents

ConnectX-6 PCIe x8/x16 Adapter Cards: Applies to MCX651105A-EDAT, MCX653105A-ECAT, MCX653106A-ECAT, MCX653105A-HDAT, MCX653106A-HDAT, MCX653105A-EFAT, MCX653106A-EFAT, and MCX683105AN-HDAT.

| Category | Item |
|---|---|
| Cards | ConnectX-6 adapter card |
| Accessories | Adapter card short bracket... |

Page 21: ConnectX-6 PCIe x16 Adapter Card for Liquid-Cooled Intel® Server System D50TNP Platforms

| Category | Item |
|---|---|
| Accessories | Adapter card tall bracket (shipped assembled on the card) |

ConnectX-6 PCIe x16 Adapter Card for Liquid-Cooled Intel® Server System D50TNP Platforms: Applies to MCX653105A-HDAL and MCX653106A-HDAL.

| Category | Item |
|---|---|
| Cards | ConnectX-6 adapter card |
| Accessories | Adapter card short bracket |
| | Adapter card tall bracket (shipped assembled on the card) |
| | Accessory kit with two TIMs (MEB000386) |

ConnectX-6 Socket Direct Cards (2x PCIe x16) ...

Page 22: Features And Benefits

| Category | Item |
|---|---|
| Harnesses | 35cm Cabline CA-II Plus harness (white) |
| | 35cm Cabline CA-II Plus harness (black) |
| Accessories | Retention clip for Cabline harness (optional accessory) |
| | Adapter card short bracket |
| | Adapter card tall bracket (shipped assembled on the card) |
| | PCIe Auxiliary card short bracket |
| | PCIe Auxiliary card tall bracket (shipped assembled on the card) |

Features and Benefits ...

- Page 23 ... Architecture Specification v1.3 compliant. Up to 200 Gigabit Ethernet: NVIDIA adapters comply with the following IEEE 802.3 standards: 200GbE / 100GbE / 50GbE / 40GbE / 25GbE / 10GbE / 1GbE; IEEE 802.3bj, 802.3bm 100 Gigabit Ethernet; IEEE 802.3by, Ethernet Consortium 25, 50 Gigabit Ethernet, supporting all FEC modes...

- Page 24 PeerDirect™ communication provides high-efficiency RDMA access by eliminating unnecessary internal data copies between components on the PCIe bus (for example, from GPU to CPU), and therefore significantly reduces application run time. ConnectX-6 advanced acceleration technology enables higher cluster efficiency and PeerDirect™...


Page 25: Operating Systems/Distributions

High-Performance Accelerations:
• Tag Matching and Rendezvous Offloads
• Adaptive Routing on Reliable Transport
• Burst Buffer Offloads for Background Checkpointing

Operating Systems/Distributions: ConnectX-6 Socket Direct cards 2x PCIe x16 (OPNs: MCX654105A-HCAT, MCX654106A-HCAT and MCX654106A-ECAT) are not supported in Windows and WinOF-2.

Page 26: Manageability

ConnectX-6 technology maintains support for manageability through a BMC. A ConnectX-6 PCIe stand-up adapter can be connected to a BMC using the MCTP over SMBus or MCTP over PCIe protocols as if it were a standard NVIDIA PCIe stand-up adapter. To configure the adapter for the specific manageability solution in...

Page 27: Interfaces

InfiniBand Interface: The network ports of the ConnectX®-6 adapter cards are compliant with the InfiniBand Architecture Specification, Release 1.3. InfiniBand traffic is transmitted through the cards' QSFP56 connectors. Ethernet Interfaces: The adapter card includes special circuits that protect the card/server from ESD shocks when copper cables are plugged in. The network ports of the ConnectX-6 adapter card are compliant with the IEEE 802.3 Ethernet standards listed in Features and Benefits.

Page 28: LED Interface

The adapter card includes special circuits that protect the card/server from ESD shocks when copper cables are plugged in. There are two I/O LEDs per port:
• LED 1 and 2: Bi-color I/O LED that indicates link status. LED behavior is described below for Ethernet and InfiniBand port configurations.
• ...

- Page 29

| Error Type | Description | LED Behavior |
|---|---|---|
| I²C | I²C access to the networking ports fails | Blinks until error is fixed |
| Over-current | Over-current condition of the networking ports | Blinks until error is fixed |

| LED Color and State | Description |
|---|---|
| Solid green | Indicates a valid link with no active traffic |
| Blinking green | Indicates a valid link with active traffic |

LED1 and LED2 Link Status Indications - InfiniBand Protocol: LED Color and State...
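A site that records LED observations by hand (for example, in a rack-walk checklist) could encode the link-status rows for LED1/LED2 as a lookup table. This is a minimal, hypothetical Python sketch. It covers only the solid-green and blinking-green states listed here. Error blinking and the protocol-specific tables are deliberately left out because their colors are not given in this excerpt.

```python
from enum import Enum


class LinkLed(Enum):
    """Link-status LED states listed for LED1/LED2 (hypothetical labels)."""
    SOLID_GREEN = "solid green"
    BLINKING_GREEN = "blinking green"


LINK_MEANING = {
    LinkLed.SOLID_GREEN: "valid link, no active traffic",
    LinkLed.BLINKING_GREEN: "valid link, active traffic",
}


def describe_link_led(observed: str) -> str:
    """Map a hand-recorded LED observation to its documented meaning."""
    try:
        state = LinkLed(observed.strip().lower())
    except ValueError:
        # Error indications and protocol-specific states are not covered here.
        return "unknown state: check the full LED tables in the manual"
    return LINK_MEANING[state]
```

An unrecognized observation returns a pointer back to the manual instead of a guess.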


Page 30: Heatsink Interface

ConnectX-6 technology maintains support for manageability through a BMC. A ConnectX-6 PCIe stand-up adapter can be connected to a BMC using the MCTP over SMBus protocol as if it were a standard NVIDIA PCIe stand-up adapter. To configure the adapter for the specific manageability solution used by the server, please contact NVIDIA Support.

Page 31: Hardware Installation

Safety warnings are provided here in English. For safety warnings in other languages, refer to the Adapter Installation Safety Instructions document available on nvidia.com. Please observe all safety warnings to avoid injury and prevent damage to system components. Note that not all warnings apply to all models.

Page 32: Installation Procedure Overview

Copper Cable Connecting/Disconnecting: Some copper cables are heavy and inflexible, so attach them to and detach them from the connectors carefully. Refer to the cable manufacturer for special warnings and instructions. Equipment Installation: This equipment should be installed, replaced, or serviced only by trained and qualified personnel. Equipment Disposal: Dispose of this equipment in accordance with all national laws and regulations.

Page 33: System Requirements

Hardware Requirements: Unless otherwise specified, NVIDIA products are designed to work in an environmentally controlled data center with low levels of gaseous and dust (particulate) contamination. The operating environment should meet severity level G1 per ISA 71.04 for gaseous contamination and ISO 14644-1 class 8 for cleanliness level.

Page 34: Airflow Requirements

| ConnectX-6 Configuration | Hardware Requirements |
|---|---|
| PCIe x8/x16 | A system with a PCI Express x8/x16 slot is required for installing the card. |
| Cards for liquid-cooled Intel® Server System D50TNP platforms | An Intel® Server System D50TNP platform with an available PCI Express x16 slot is required for installing the card. |

Page 35: Software Requirements

• Operating Systems/Distributions section under the Introduction section.
• Software Stacks: NVIDIA OpenFabric software package MLNX_OFED for Linux, WinOF-2 for Windows, and VMware. See the Driver Installation section.

Safety Precautions: The adapter is being installed in a system that operates with voltages that can be lethal. Before opening the case of the system, observe the following precautions to avoid injury and prevent damage to system components.

Page 36: Pre-Installation Checklist

• Make sure to use only insulated tools.
• Verify that the system is powered off and unplugged.
• It is strongly recommended to use an ESD strap or other antistatic devices.

Pre-Installation Checklist:
• Unpack the ConnectX-6 card: Unpack and remove the ConnectX-6 card. Check the parts against the package contents list to verify that all parts were shipped. Check the parts for visible damage that may have occurred during shipping.

Page 37: Installation Instructions

This section provides detailed instructions on how to install your adapter card in a system. Choose the installation instructions according to the ConnectX-6 configuration you have purchased.

| OPNs | Installation Instructions |
|---|---|
| MCX651105A-EDAT, MCX653105A-HDAT, MCX653106A-HDAT, MCX653105A-ECAT... | PCIe x8/16 Cards Installation Instructions |

Page 38: Cables And Modules

| OPNs | Installation Instructions |
|---|---|
| MCX654105A-HCAT, MCX654106A-HCAT, MCX654106A-ECAT | Socket Direct (2x PCIe x16) Cards Installation Instructions |
| MCX653105A-HDAL, MCX653106A-HDAL | Cards for Intel Liquid-Cooled Platforms Installation Instructions |

Cables and Modules

Cable Installation: All cables can be inserted or removed with the unit powered on. To insert a cable, press the connector into the port receptacle until the connector is firmly seated. Support the weight of the cable before connecting it to the adapter card.

Page 39: Identifying The Card In Your System

6. To remove a cable, disengage the locks and slowly pull the connector away from the port receptacle. The LED indicator turns off when the cable is unseated. Identifying the Card in Your System. On Linux: Get the device location on the PCI bus by running `lspci` and locating lines with the string “Mellanox Technologies”:

| ConnectX-6 Card Configuration | lspci Command Output Example |
|---|---|
| Single-port Socket Direct Card (2x... | |

- Page 40 On Windows: Open Device Manager on the server. Click Start => Run, and then enter `devmgmt.msc`. Expand System Devices and locate your NVIDIA ConnectX-6 adapter card. Right-click your adapter's row and select Properties to display the adapter card properties window. Click the Details tab and select Hardware Ids (Windows 2012/R2/2016) from the Property pull-down menu.

- Page 41 In the Value display box, check the VEN and DEV fields (fields are separated by ‘&’). In the display example above, notice the sub-string “PCI\VEN_15B3&DEV_1003”. VEN is 0x15B3, the Vendor ID of NVIDIA (registered as Mellanox Technologies). DEV is the adapter's PCI Device ID; for ConnectX-6 it is 0x101B.
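When many Windows hosts need checking, the same VEN/DEV reading can be automated. This is a minimal Python sketch that splits a hardware ID string on ‘&’ and checks the vendor field. The function names are hypothetical, and it assumes the ID arrives as plain text (for example, copied from the Value box). Real hardware IDs may carry further fields such as SUBSYS and REV; these are parsed but ignored.

```python
NVIDIA_VENDOR_ID = 0x15B3  # VEN value described in the manual


def parse_hardware_id(hw_id: str) -> dict:
    """Split a Windows hardware ID such as 'PCI\\VEN_15B3&DEV_1003' into fields."""
    head, sep, tail = hw_id.partition("\\")
    body = tail if sep else head  # drop the 'PCI' bus prefix when present
    fields = {}
    for part in body.split("&"):
        key, found, value = part.partition("_")
        if found:
            fields[key.upper()] = value.upper()
    return fields


def is_nvidia_adapter(hw_id: str) -> bool:
    """True if the VEN field matches the NVIDIA (Mellanox) vendor ID."""
    ven = parse_hardware_id(hw_id).get("VEN")
    return ven is not None and int(ven, 16) == NVIDIA_VENDOR_ID
```

For example, `parse_hardware_id(r"PCI\VEN_15B3&DEV_1003")` returns `{"VEN": "15B3", "DEV": "1003"}`.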


Page 42: PCIe x8/16 Cards Installation Instructions

The list of NVIDIA PCI Device IDs can be found in the PCI ID repository at http://pci-ids.ucw.cz/read/PC/15b3. Installing the Card: Applies to OPNs MCX651105A-EDAT, MCX653105A-HDAT, MCX653106A-HDAT, MCX683105AN-HDAT, MCX653106A-ECAT and MCX653105A-ECAT. Make sure to install the ConnectX-6 card in a PCIe slot capable of supplying the power and airflow stated in Specifications.

- Page 43 Step 2: Applying even pressure at both corners of the card, insert the adapter card into a PCI Express slot until it is firmly seated.


Page 44: Uninstalling The Card

Do not use excessive force when seating the card, as this may damage the chassis. Secure the adapter card to the chassis. Step 1: Secure the bracket to the chassis with the bracket screw. Uninstalling the Card: Safety Precautions...

- Page 45 The adapter is installed in a system that operates with voltages that can be lethal. Before uninstalling the adapter card, please observe the following precautions to avoid injury and prevent damage to system components. Remove any metallic objects from your hands and wrists. It is strongly recommended to use an ESD strap or other antistatic devices.


Page 46: Socket Direct (2x PCIe x16) Cards Installation Instructions

The hardware installation section uses the terms white harness and black harness to distinguish the two supplied cables. Due to supply chain variations, some cards may be supplied with two black harnesses instead. To distinguish the two harnesses in that case, one black harness was marked with a “WHITE”...

Page 47: Installing The Card

Applies to MCX654105A-HCAT, MCX654106A-HCAT and MCX654106A-ECAT. The installation instructions include steps that use a retention clip while connecting the Cabline harnesses to the cards. Note that the retention clip is an optional accessory. ...

- Page 48 Step 2: Plug the Cabline CA-II Plus harnesses into the ConnectX-6 adapter card, paying attention to the color coding. As indicated on both sides of the card, plug the black harness into the component side and the white harness into the print side. Step 2: Verify the plugs are locked.

- Page 49 Step 3: Slide the retention clip latches through the cutouts on the PCB. The latches should face the annotation on the PCB. ...

- Page 50 Step 4: Clamp the retention clip. Verify both latches are firmly locked.

- Page 51 Step 5: Slide the Cabline CA-II Plus harnesses through the retention clip. Make sure that the clip opening is facing the plugs. ...

- Page 52 Step 6: Plug the Cabline CA-II Plus harnesses into the PCIe Auxiliary Card. As indicated on both sides of the Auxiliary Connection card, plug the black harness into the component side and the white harness into the print side. Step 7: Verify the plugs are locked.

- Page 53 Step 8: Slide the retention clip through the cutouts on the PCB. Make sure the latches face the "Black Cable" annotation, as shown in the picture below. Step 9: Clamp the retention clip. Verify both latches are firmly locked.

- Page 54 Connect the ConnectX-6 adapter and PCIe Auxiliary Connection cards to available PCI Express x16 slots in the chassis. Step 1: Locate two available PCI Express x16 slots. Step 2: Applying even pressure at both corners of the card, insert the adapter card into one of the PCI Express x16 slots until it is firmly seated.

- Page 55 Do not use excessive force when seating the cards, as this may damage the system or the cards. Step 3: Applying even pressure at both corners of the card, insert the Auxiliary Connection card into the other PCI Express x16 slot until it is firmly seated.

- Page 56 Secure the ConnectX-6 adapter and PCIe Auxiliary Connection Cards to the chassis. Step 1: Secure the brackets to the chassis with the bracket screw.


Page 57: Uninstalling The Card

Safety Precautions: The adapter is installed in a system that operates with voltages that can be lethal. Before uninstalling the adapter card, please observe the following precautions to avoid injury and prevent damage to system components. Remove any metallic objects from your hands and wrists. It is strongly recommended to use an ESD strap or other antistatic devices.

- Page 58 Verify that the system is powered off and unplugged. Wait 30 seconds. To remove the card, disengage the retention mechanisms on the bracket (clips or screws). 4. Holding the adapter card by its center, gently pull the ConnectX-6 and Auxiliary Connection cards out of the PCI Express slots.


Page 59: Cards For Intel Liquid-Cooled Platforms Installation Instructions

The following instructions apply to ConnectX-6 cards designed for Intel liquid-cooled platforms with an ASIC interposer cooling mechanism (OPNs: MCX653105A-HDAL and MCX653106A-HDAL). The figures are for illustration purposes only. Use these instructions in conjunction with the server's documentation. Installing the Card ...

- Page 60 Pay extra attention to the black bumpers located on the print side of the card. Failure to do so may harm the bumpers. Apply the supplied thermal pad (one of the two) on top of the ASIC interposer or onto the cold plate. ...

- Page 61 Ensure the thermal pad is in place and intact. Once the thermal pad is applied to the ASIC interposer, the non-tacky side should be visible on the card's faceplate.

- Page 62 Gently peel the liner of the pad's non-tacky side visible on the card's faceplate. Failure to do so may degrade the thermal performance of the product. Install the adapter into the riser and attach the card to the PCIe x16 slot. Disengage the adapter riser from the blade.

- Page 63 Vertically insert the riser that populates the adapter card into the server blade.

- Page 64 Applying even pressure on the riser, gently insert the riser into the server. Secure the riser with the supplied screws. Please refer to the server blade documentation for more information.


Page 65: Driver Installation

• Windows Driver Installation
• VMware Driver Installation

Linux Driver Installation: This section describes how to install and test the MLNX_OFED for Linux package on a single server with an NVIDIA ConnectX-6 adapter card installed.

Prerequisites:

| Requirements | Description |
|---|---|
| Platforms | A server platform with a ConnectX-6 InfiniBand/Ethernet adapter card installed. |

Page 66: Downloading NVIDIA OFED

Verify that the system has an NVIDIA network adapter installed by running the `lspci` command. The table below provides output examples per ConnectX-6 card configuration. Single-port Socket Direct Card (2x PCIe x16): `[root@mftqa-009 ~]# lspci | grep -i mellanox` returns `a3:00.0 Infiniband controller: Mellanox Technologies MT28908 Family [ConnectX-6]`...
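The lspci check can also be scripted, for example as a pre-install gate. This is a minimal Python sketch that filters lspci output the way `grep -i mellanox` does and splits each match into bus address, device class, and description. The function names are hypothetical. The regular expression assumes lspci's default `<bus address> <class>: <description>` line layout.

```python
import re
import subprocess
from typing import List, Tuple

# Matches lines such as:
# "a3:00.0 Infiniband controller: Mellanox Technologies MT28908 Family [ConnectX-6]"
LSPCI_LINE = re.compile(r"^(?P<bdf>\S+)\s+(?P<cls>[^:]+):\s+(?P<desc>.+)$")


def find_mellanox_devices(lspci_output: str) -> List[Tuple[str, str, str]]:
    """Return (bus address, device class, description) for each Mellanox line."""
    devices = []
    for line in lspci_output.splitlines():
        if "mellanox" not in line.lower():
            continue  # same filter as `grep -i mellanox`
        match = LSPCI_LINE.match(line.strip())
        if match:
            devices.append((match["bdf"], match["cls"], match["desc"]))
    return devices


def run_lspci() -> str:
    """Capture `lspci` output; requires pciutils on the host."""
    return subprocess.run(["lspci"], capture_output=True, text=True, check=True).stdout
```

On a live host, `find_mellanox_devices(run_lspci())` returns an empty list when no adapter is visible. A script can treat that as "stop before installing MLNX_OFED".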

Page 67: Installing MLNX_OFED

The image’s name has the format MLNX_OFED_LINUX-<ver>-<OS label>-<CPU arch>.iso. You can download and install the latest OpenFabrics Enterprise Distribution (OFED) software package from the NVIDIA website at nvidia.com/en-us/networking → Products → Software → InfiniBand Drivers → NVIDIA MLNX_OFED. Scroll down to the Download wizard and click the Download tab.

- Page 68
• Installs the MLNX_OFED_LINUX binary RPMs (if they are available for the current kernel)
• Identifies the currently installed InfiniBand and Ethernet network adapters and automatically upgrades the firmware

Note: To upgrade the firmware using customized firmware binaries, provide the path to the folder containing the firmware binary files with the `--fw-image-dir` option.
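The naming pattern can be turned into a small helper. One use is checking that a downloaded image matches the target host before mounting it. This is a minimal sketch with a hypothetical function name. It assumes `platform.machine()` reports the CPU architecture in the same form the image name uses (for example `x86_64`), which is worth confirming against the download wizard for your platform.

```python
import platform
from typing import Optional


def mlnx_ofed_iso_name(version: str, os_label: str, cpu_arch: Optional[str] = None) -> str:
    """Compose MLNX_OFED_LINUX-<ver>-<OS label>-<CPU arch>.iso.

    cpu_arch defaults to the running machine (platform.machine(), e.g. 'x86_64').
    """
    arch = cpu_arch or platform.machine()
    return f"MLNX_OFED_LINUX-{version}-{os_label}-{arch}.iso"
```

For example, `mlnx_ofed_iso_name("5.2-0.5.5.0", "rhel7.6", "x86_64")` yields `MLNX_OFED_LINUX-5.2-0.5.5.0-rhel7.6-x86_64.iso`. The version and OS label here are borrowed from the `MLNX_OFED_LINUX-5.2-0.5.5.0-rhel7.6-x86_64-ext.tgz` tarball that appears later in the manual.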

- Page 69 If you regenerate kernel modules for a custom kernel (using `--add-kernel-support`), the package installation does not automatically regenerate the initramfs. In some cases, such as a system with a root filesystem mounted over a ConnectX card, not regenerating the initramfs may even cause the system to fail to reboot.


Page 70: Installation Procedure

For the list of installation options, run: `./mlnxofedinstall --h`. Installation Procedure: This section describes the installation procedure of MLNX_OFED on NVIDIA adapter cards. Log in to the installation machine as root. Mount the ISO image on your machine: `host1# mount -o ro,loop MLNX_OFED_LINUX-<ver>-<OS label>-<CPU arch>.iso /mnt`. Run the installation script.

- Page 71 For unattended installation, use the `--force` installation option while running the MLNX_OFED installation script: `/mnt/mlnxofedinstall --force`. MLNX_OFED for Ubuntu should be installed in a chroot environment with the following flags: `./mlnxofedinstall --without-dkms --add-kernel-support --kernel <kernel version in chroot> --without-fw-update --force`. For example: `./mlnxofedinstall --without-dkms --add-kernel-support --kernel 3.13.0-85-generic --without-fw-update --force`. If the kernel sources are not in their default location, also pass their path with `--kernel-sources`.
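In image-building pipelines, the chroot flags are easier to audit as an argument list than as a hand-typed string. This is a minimal Python sketch with a hypothetical helper that assembles exactly the flags listed above and prints the command for review rather than running it. It assumes that `--kernel-sources` takes a path argument and may be appended after the other options.

```python
import shlex
from typing import List, Optional


def ubuntu_chroot_install_argv(kernel: str, kernel_sources: Optional[str] = None) -> List[str]:
    """Flags the manual lists for installing MLNX_OFED for Ubuntu in a chroot."""
    argv = [
        "./mlnxofedinstall",
        "--without-dkms",
        "--add-kernel-support",
        "--kernel", kernel,
        "--without-fw-update",
        "--force",
    ]
    if kernel_sources:  # only needed when sources are not in their default location
        argv += ["--kernel-sources", kernel_sources]
    return argv


if __name__ == "__main__":
    # Print the command for review instead of running it.
    print(shlex.join(ubuntu_chroot_install_argv("3.13.0-85-generic")))
```

Building a list avoids shell-quoting mistakes. The same list can later be passed to `subprocess.run` inside the chroot.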

- Page 72 Status: No matching image found. Error message #2: "The firmware for this device is not distributed inside NVIDIA driver: 0000:01:00.0 (PSID: IBM2150110033) To obtain firmware for this device, please contact your HW vendor." Case A: If the installation script performed a firmware update on your network adapter, either restart the driver or reboot the system for the firmware update to take effect.


Page 73: Installation Results

If your machine has an unsupported network adapter device, no firmware update occurs and the following error message is printed: "The firmware for this device is not distributed inside NVIDIA driver: 0000:01:00.0 (PSID: IBM2150110033) To obtain firmware for this device, please contact your HW vendor."...
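On hosts with several adapters, the installer output can be scanned for this message so that each affected device's PCI address and PSID can be passed to the hardware vendor. This is a minimal Python sketch with hypothetical names. It assumes the message text matches the example quoted above and that PCI addresses use the `dddd:bb:dd.f` form shown there.

```python
import re
from typing import List, Tuple

# Pattern for the installer message quoted above; captures the PCI address and PSID.
FW_NOT_BUNDLED = re.compile(
    r"not distributed inside NVIDIA driver:\s*"
    r"(?P<pci>[0-9A-Fa-f]{4}:[0-9A-Fa-f]{2}:[0-9A-Fa-f]{2}\.[0-7])\s*"
    r"\(PSID:\s*(?P<psid>\w+)\)"
)


def devices_without_bundled_firmware(log_text: str) -> List[Tuple[str, str]]:
    """Return (PCI address, PSID) pairs for every such message in the log text."""
    return [(m["pci"], m["psid"]) for m in FW_NOT_BUNDLED.finditer(log_text)]
```

An empty result means no device hit this case. Other firmware messages, such as "No matching image found", are not matched by this pattern.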

Page 74: Driver Load Upon System Boot

Upon system boot, the NVIDIA drivers are loaded automatically. To prevent them from loading automatically upon system boot:
• Add the following lines to the `/etc/modprobe.d/mlnx.conf` file: `blacklist mlx5_core` and `blacklist mlx5_ib`.
• Set `ONBOOT=no` in the `/etc/infiniband/openib.conf` file.
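When these two edits are applied by configuration management, they should be idempotent. This is a minimal Python sketch with hypothetical helper names. Each helper takes a file's current contents and returns the updated contents; reading and writing the files under `/etc` (which needs root) is left to the caller. It assumes `ONBOOT` appears in `openib.conf` as a plain `KEY=value` line.

```python
import re

BLACKLIST_ENTRIES = ("blacklist mlx5_core", "blacklist mlx5_ib")
ONBOOT_LINE = re.compile(r"^ONBOOT=.*$", re.MULTILINE)


def add_mlx5_blacklist(mlnx_conf: str) -> str:
    """Return mlnx.conf content with any missing blacklist lines appended."""
    lines = mlnx_conf.splitlines()
    present = {line.strip() for line in lines}
    lines += [entry for entry in BLACKLIST_ENTRIES if entry not in present]
    return "\n".join(lines) + "\n"


def disable_onboot(openib_conf: str) -> str:
    """Return openib.conf content with ONBOOT=no, adding the key if it is missing."""
    if ONBOOT_LINE.search(openib_conf):
        return ONBOOT_LINE.sub("ONBOOT=no", openib_conf)
    base = openib_conf.rstrip("\n")
    return (base + "\n" if base else "") + "ONBOOT=no\n"
```

Running either helper twice gives the same result as running it once, so repeated configuration runs do not pile up duplicate lines.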

Page 75: Installation Logging


Page 76: Additional Installation Procedures

Mount the ISO image on your machine and copy its content to a shared location in your network: `# mount -o ro,loop MLNX_OFED_LINUX-<ver>-<OS label>-<CPU arch>.iso /mnt`. Download and install NVIDIA's GPG key, available at http://www.mellanox.com/downloads/ofed/RPM-GPG-KEY-Mellanox: `# wget http://www.mellanox.com/downloads/ofed/RPM-GPG-KEY-Mellanox`...

- Page 77 Example output:
  - `warning: rpmts_HdrFromFdno: Header V3 DSA/SHA1 Signature, key ID 6224c050: NOKEY`
  - `Retrieving key from file:///repos/MLNX_OFED/<MLNX_OFED file>/RPM-GPG-KEY-Mellanox`
  - `Importing GPG key 0x6224C050: Userid: "Mellanox Technologies (Mellanox Technologies - Signing Key v2) <support@mellanox.com>" From : /repos/MLNX_OFED/<MLNX_OFED file>/RPM-GPG-KEY-Mellanox`
  - `Is this ok [y/N]:`

  Check that the key was successfully imported: `# rpm -q gpg-pubkey --qf '%{NAME}-%{VERSION}-%{RELEASE}\t%{SUMMARY}\n' | grep Mellanox`...

- Page 78 `# mount -o ro,loop MLNX_OFED_LINUX-<ver>-<OS label>-<CPU arch>.iso /mnt` Build the packages with kernel support and create the tarball: `# /mnt/mlnx_add_kernel_support.sh --make-tgz <optional --kmp> -k $(uname -r) -m /mnt/`. Example output: `Note: This program will create MLNX_OFED_LINUX TGZ rhel7.6 under /tmp directory. Do you want to continue?[y/N]:y See log file /tmp/mlnx_iso.4120_logs/mlnx_ofed_iso.4120.log` ...
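The `$(uname -r)` substitution is the part most often lost when this step is wrapped in automation. This is a minimal Python sketch with a hypothetical helper that assembles the same command. It uses `platform.release()`, which reports the running kernel release on Linux as `uname -r` does, and places the optional `--kmp` flag where the manual shows it.

```python
import platform
import shlex
from typing import List


def add_kernel_support_argv(mount_dir: str = "/mnt", kmp: bool = False) -> List[str]:
    """Mirror: <mount>/mlnx_add_kernel_support.sh --make-tgz [--kmp] -k $(uname -r) -m <mount>/"""
    mount_dir = mount_dir.rstrip("/")
    argv = [f"{mount_dir}/mlnx_add_kernel_support.sh", "--make-tgz"]
    if kmp:
        argv.append("--kmp")
    # platform.release() reports the running kernel release, like `uname -r` on Linux.
    argv += ["-k", platform.release(), "-m", f"{mount_dir}/"]
    return argv


if __name__ == "__main__":
    print(shlex.join(add_kernel_support_argv()))
```

Building for a kernel other than the running one would mean replacing `platform.release()` with an explicit kernel version.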

- Page 79

| repo id | repo name | status |
|---|---|---|
| mlnx_ofed | MLNX_OFED Repository | |
| rpmforge | RHEL 6Server - RPMforge.net - dag | 4,597 |

repolist: 8,351

Installing MLNX_OFED Using the YUM Tool: After setting up the YUM repository for the MLNX_OFED package, perform the following: View the available package groups by invoking `# yum search mlnx-ofed-`. Example output: `mlnx-ofed-all.noarch : MLNX_OFED all installer package`...

- Page 80 ...(User Space packages only), where:
  - mlnx-ofed-all: Installs all available packages in MLNX_OFED
  - mlnx-ofed-basic: Installs basic packages required for running NVIDIA cards
  - mlnx-ofed-guest: Installs packages required by guest OS
  - mlnx-ofed-hpc: Installs packages required for HPC
  - mlnx-ofed-hypervisor: Installs packages required by hypervisor OS...

- Page 81
  - `mlnx-ofed-guest-3.17.4-301.fc21.x86_64.noarch : MLNX_OFED guest installer package for kernel 3.17.4-301.fc21.x86_64 (without KMP support)`
  - `mlnx-ofed-hpc-3.17.4-301.fc21.x86_64.noarch : MLNX_OFED hpc installer package for kernel 3.17.4-301.fc21.x86_64 (without KMP support)`
  - `mlnx-ofed-hypervisor-3.17.4-301.fc21.x86_64.noarch : MLNX_OFED hypervisor installer package for kernel 3.17.4-301.fc21.x86_64 (without KMP support)`
  - `mlnx-ofed-vma-3.17.4-301.fc21.x86_64.noarch : MLNX_OFED vma installer package for kernel 3.17.4-301.fc21.x86_64 (without KMP support)`


Page 82: Installing MLNX_OFED Using apt-get

Log in to the installation machine as root. Extract the MLNX_OFED package to a shared location in your network. It can be downloaded from https://www.nvidia.com/en-us/networking/ → Products → Software → InfiniBand Drivers. Create an apt-get repository configuration file called `/etc/apt/sources.list.d/mlnx_ofed.list` with the following content: `deb file:/<path to extracted MLNX_OFED package>/DEBS ./`. Download and install NVIDIA's GPG key. ...

- Page 83 `# apt-key list` output: `1024D/A9E4B643 2013-08-11 Mellanox Technologies <support@mellanox.com> 1024g/09FCC269 2013-08-11`. Update the apt-get cache: `# sudo apt-get update`. Setting up MLNX_OFED apt-get Repository Using `--add-kernel-support`: Log in to the installation machine as root. Mount the ISO image on your machine and copy its content to a shared location in your network: `# mount -o ro,loop MLNX_OFED_LINUX-<ver>-<OS label>-<CPU arch>.iso /mnt`. Build the packages with kernel support and create the tarball. ...

- Page 84 # tar -xvf /tmp/MLNX_OFED_LINUX-5.2-0.5.5.0-rhel7.6-x86_64-ext.tgz Create an apt-get repository configuration file called "/etc/apt/sources.list.d/mlnx_ofed.list" with the following content: deb [trusted=yes] file:/<path to extracted MLNX_OFED package>/DEBS ./ Update the apt-get cache: # sudo apt-get update Installing MLNX_OFED Using the apt-get Tool: After setting up the apt-get repository for the MLNX_OFED package, perform the following: View the available package groups by invoking: ...

- Page 85 mlnx-ofed-all-user-only - MLNX_OFED all-user-only installer package (User Space packages only) mlnx-ofed-vma-eth - MLNX_OFED vma-eth installer package (with DKMS support) mlnx-ofed-vma - MLNX_OFED vma installer package (with DKMS support) mlnx-ofed-dpdk-upstream-libs-user-only - MLNX_OFED dpdk-upstream-libs-user-only installer package (User Space packages only) mlnx-ofed-basic-user-only - MLNX_OFED basic-user-only installer package (User Space packages only) mlnx-ofed-basic-exact - MLNX_OFED basic installer...
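Both apt-get procedures above reduce to writing one `deb` line into /etc/apt/sources.list.d/mlnx_ofed.list, with `[trusted=yes]` added for the tarball built with --add-kernel-support, plus a read-only loop mount of the ISO. The helpers below are a hypothetical sketch of those two steps; the extraction directory /opt/MLNX_OFED and the OS label ubuntu20.04 in the usage lines are illustrative placeholders, not values from the manual.

```python
from __future__ import annotations

import shlex
from pathlib import Path

SOURCES_FILE = "/etc/apt/sources.list.d/mlnx_ofed.list"  # path given in the text


def ofed_apt_source_line(extract_dir: str, trusted: bool = False) -> str:
    """Build the 'deb' line that points apt-get at the extracted DEBS directory."""
    option = "[trusted=yes] " if trusted else ""  # form used in the --add-kernel-support flow
    return f"deb {option}file:{extract_dir.rstrip('/')}/DEBS ./\n"


def write_apt_source(extract_dir: str, trusted: bool = False, target: str = SOURCES_FILE) -> Path:
    """Write the repository file (requires root when target is under /etc)."""
    path = Path(target)
    path.write_text(ofed_apt_source_line(extract_dir, trusted))
    return path


def iso_mount_command(ver: str, os_label: str, arch: str, mountpoint: str = "/mnt") -> list[str]:
    """Read-only loop mount of MLNX_OFED_LINUX-<ver>-<OS label>-<CPU arch>.iso."""
    iso = f"MLNX_OFED_LINUX-{ver}-{os_label}-{arch}.iso"
    return ["mount", "-o", "ro,loop", iso, mountpoint]


if __name__ == "__main__":
    print(ofed_apt_source_line("/opt/MLNX_OFED", trusted=True), end="")
    print(shlex.join(iso_mount_command("5.2-0.5.5.0", "ubuntu20.04", "x86_64")))
```

After writing the file, `sudo apt-get update` is still required, as the procedure states.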

-

Page 86: Performance Tuning

• MCX654106A-HCAT • MCX654106A-ECAT For Windows, download and install the latest WinOF-2 for Windows software package, available from the NVIDIA WinOF-2 web page. Follow the installation instructions included in the download package (also available from the download page). The snapshots in the following sections are presented for illustration purposes only. The installation interface may vary slightly, depending on the operating...

Page 87: Software Requirements

Software Requirements (Description | Package): Windows Server 2022, Windows Server 2019, Windows Server 2016, Windows Server 2012 R2, Windows 11 Client (64-bit only), Windows 10 Client (64-bit only), Windows 8.1 Client (64-bit only) | MLNX_WinOF2-<version>_All_x64.exe. Note: The operating systems listed above must run with administrator privileges. Downloading WinOF-2 Driver: To download the .exe file according to your operating system, follow the steps below: Obtain the machine architecture.
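Step 1 (obtaining the machine architecture) and the package naming can be sketched as follows. This assumes, per the requirements table, that every supported Windows version uses the single MLNX_WinOF2-<version>_All_x64.exe package, and that a 64-bit x86 host reports "AMD64" on Windows (or "x86_64" elsewhere) through `platform.machine()`. The version string "1.0" in the checks is a placeholder.

```python
from __future__ import annotations

import platform

# Architecture strings treated as 64-bit x86 (assumption for this sketch).
_X64_NAMES = {"amd64", "x86_64", "x64"}


def machine_arch() -> str:
    """Step 1: obtain the machine architecture as reported by the OS."""
    return platform.machine()


def winof2_package_name(version: str, arch: str | None = None) -> str:
    """Return the expected installer file name for this machine."""
    arch = arch if arch is not None else machine_arch()
    if arch.lower() not in _X64_NAMES:
        raise ValueError(f"no x64 WinOF-2 package for architecture {arch!r}")
    return f"MLNX_WinOF2-{version}_All_x64.exe"
```

On Windows, `echo %PROCESSOR_ARCHITECTURE%` in a command prompt shows the same architecture value.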

Page 88: Installing WinOF-2 Driver

Go to the WinOF-2 web page at: https://www.nvidia.com/en-us/networking/ > Products > Software > InfiniBand Drivers (Learn More) > NVIDIA WinOF-2. Download the .exe image according to the architecture of your machine (see Step 1). The name of the .exe is in the following format: MLNX_WinOF2-<version>_<arch>.exe. - Page 89 MLNX_WinOF2_<revision_version>_All_Arch.exe /v" MT_SKIPFWUPGRD=1" [Optional] If you do not want to install the Rshim driver, run: MLNX_WinOF2_<revision_version>_All_Arch.exe /v"...

- Page 90 7. Read and accept the license agreement and click Next.

- Page 91 8. Select the target folder for the installation.

- Page 92 • If the user has a standard NVIDIA® card with an older firmware version, the firmware will be updated accordingly. However, if the user has both an OEM card and an NVIDIA® card, only the NVIDIA® card will be updated.

- Page 93 10. Select a Complete or Custom installation, then follow Step a onward.

- Page 94 Select the desired feature to install: • Performance tools - installs the performance tools that are used to measure performance in the user environment • Documentation - contains the User Manual and Release Notes • Management tools - installation tools used for management, such as mlxstat •...

- Page 95 b. Click Next to install the desired tools.

- Page 96 Click Install to start the installation. If the firmware upgrade option was checked in Step 7, you will be notified if a firmware upgrade is required. ...

-

Page 98: Unattended Installation

Click Finish to complete the installation. Unattended Installation: If no reboot options are specified, the installer restarts the computer whenever necessary without displaying any prompt or warning to the user. To control the reboots, use the /norestart or /forcerestart standard command-line options. The following is an example of an unattended installation session. - Page 99 Install the driver. Run: MLNX_WinOF2-[Driver/Version]_<revision_version>_All_Arch.exe /S /v/qn [Optional] Manually configure your setup to contain the logs option: MLNX_WinOF2-[Driver/Version]_<revision_version>_All_Arch.exe /S /v/qn /v"/l*vx [LogFile]" [Optional] If you wish to control whether to install the ND provider (MT_NDPROPERTY default value is True): MLNX_WinOF2-[Driver/Version]_<revision_version>_All_Arch.exe /vMT_NDPROPERTY=1 [Optional] If you do not wish to upgrade your firmware version (MT_SKIPFWUPGRD default value is False): MLNX_WinOF2-[Driver/Version]_<revision_version>_All_Arch.exe /vMT_SKIPFWUPGRD=1 [Optional] If you do not want to install the Rshim driver, run:

-

Page 100: Firmware Upgrade

/v" SKIPUNSUPPORTEDDEVCHECK=1" Firmware Upgrade: If the machine has a standard NVIDIA® card with an older firmware version, the firmware will be automatically updated as part of the NVIDIA® WinOF-2 package installation. For information on how to upgrade firmware manually, please refer to the MFT User Manual. ...
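The unattended-installation options listed on Pages 98-99 can be assembled into a single command string, as sketched below. This is a hypothetical helper. It assumes that several /v options may be combined on one command line (the manual shows only /v/qn together with the log option) and that MT_NDPROPERTY=0 disables the ND provider (the manual shows only =1). Log paths containing spaces would need extra quoting, which this sketch does not handle.

```python
from __future__ import annotations


def unattended_command(installer: str, log_file: str | None = None,
                       skip_fw_upgrade: bool = False, nd_provider: bool | None = None) -> str:
    """Compose a silent WinOF-2 install command from the documented options."""
    exe = f'"{installer}"' if " " in installer else installer  # quote paths with spaces
    parts = [exe, "/S", "/v/qn"]                                # silent, no UI
    if log_file:
        parts.append(f'/v"/l*vx {log_file}"')                   # verbose installer log
    if nd_provider is not None:
        parts.append(f"/vMT_NDPROPERTY={int(nd_provider)}")     # default per the text: True
    if skip_fw_upgrade:
        parts.append("/vMT_SKIPFWUPGRD=1")                      # default per the text: False
    return " ".join(parts)
```

The resulting string is meant to be run from a Windows command prompt with administrator privileges; add /norestart or /forcerestart as described above if reboots must be controlled.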

Page 101: Installing NATIVE ESXi Driver for VMware vSphere

4.16.8.8-1OEM.650.0.0.4240417 PartnerSupported 2017-01-31 nmlx5-rdma 4.16.8.8-1OEM.650.0.0.4240417 PartnerSupported 2017-01-31 After the installation process, all kernel modules are loaded automatically upon boot. Removing Earlier NVIDIA Drivers: Please unload the previously installed drivers before removing them. To remove all the drivers:...

Page 102: Firmware Programming

Log into the ESXi server with root permissions. List all the existing NATIVE ESXi driver modules (see Step 4 in Installing NATIVE ESXi Driver for VMware vSphere). Remove each module: #> esxcli software vib remove -n nmlx5-rdma #> esxcli software vib remove -n nmlx5-core ...
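The removal step issues one `esxcli software vib remove -n <module>` per module. A minimal sketch, assuming a Python interpreter is available where esxcli runs, is shown below. It covers only the two modules named in the excerpt; the list in the manual continues ("..."), so extend it with whatever `esxcli software vib list` reports on your host. Dry-run mode only prints the commands.

```python
import subprocess

# Module names shown in the text, removed in the order listed there.
NATIVE_MODULES = ("nmlx5-rdma", "nmlx5-core")


def vib_remove_commands(modules=NATIVE_MODULES):
    """One 'esxcli software vib remove -n <module>' argument list per module."""
    return [["esxcli", "software", "vib", "remove", "-n", name] for name in modules]


def remove_native_drivers(modules=NATIVE_MODULES, dry_run=True):
    """Print (dry run) or execute the removal commands; stop at the first failure."""
    for cmd in vib_remove_commands(modules):
        if dry_run:
            print(" ".join(cmd))
        else:
            subprocess.run(cmd, check=True)
```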

Page 103: Troubleshooting

Troubleshooting General Troubleshooting: Server unable to find the adapter • Ensure that the adapter is placed correctly • Make sure the adapter slot and the adapter are compatible • Install the adapter in a different PCI Express slot • Use the drivers that came with the adapter or download the latest •...

Page 104: Linux Troubleshooting

-d <mst_device> q • Ports Information: ibstat; ibv_devinfo • Firmware Version Upgrade: To download the latest firmware version, refer to the NVIDIA Update and Query Utility. • Collect Log File: cat /var/log/messages; dmesg >> system.log; journalctl (applicable on newer operating systems)
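The port-information and log-collection commands in this table can be gathered into a single file in one pass. The sketch below runs each listed tool if it is installed and appends its output to system.log; the `--no-pager -b` flags added to journalctl (non-interactive output, current boot only) are an assumption, not part of the manual's table. Some commands, such as dmesg, may need root on hardened systems; their error output is captured as well.

```python
import shutil
import subprocess

# Commands from the troubleshooting table; journalctl flags are an added assumption.
DIAG_COMMANDS = (
    ("ibstat",),
    ("ibv_devinfo",),
    ("cat", "/var/log/messages"),
    ("dmesg",),
    ("journalctl", "--no-pager", "-b"),
)


def collect_diagnostics(outfile="system.log", commands=DIAG_COMMANDS):
    """Append each available command's output to outfile; return the tools that ran."""
    ran = []
    with open(outfile, "a", encoding="utf-8") as log:
        for cmd in commands:
            if shutil.which(cmd[0]) is None:
                log.write(f"### {cmd[0]}: not installed, skipped\n")
                continue
            result = subprocess.run(list(cmd), capture_output=True, text=True, errors="replace")
            log.write(f"### {' '.join(cmd)} (exit code {result.returncode})\n")
            log.write(result.stdout + result.stderr + "\n")
            ran.append(cmd[0])
    return ran
```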

Page 105: Windows Troubleshooting

Windows Troubleshooting • Environment Information: From the Windows desktop, choose the Start menu and run msinfo32. To export system information to a text file, choose the Export option from the File menu, assign a file name and save. • Mellanox Firmware Tool (MFT): Download and install MFT (see MFT Documentation). Refer to the User Manual for installation instructions.

Page 106: Updating Adapter Firmware

To check that your card is programmed with the latest available firmware version, download the mlxup firmware update and query utility. The utility can query for available NVIDIA adapters and indicate which adapters require a firmware update. If the user confirms, mlxup upgrades the firmware using embedded images. - Page 107 Restart needed for updates to take effect. Log File: /var/log/mlxup/mlxup-yyyymmdd.log...
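mlxup reports its log file as /var/log/mlxup/mlxup-yyyymmdd.log. The hypothetical helpers below derive the expected name for a given date and find the newest log. They assume the date in the name is the run date and rely on yyyymmdd names sorting lexicographically in date order.

```python
from __future__ import annotations

from datetime import date
from pathlib import Path

LOG_DIR = Path("/var/log/mlxup")  # directory from the sample output above


def mlxup_log_path(day: date, log_dir: Path = LOG_DIR) -> Path:
    """Expected log path for a run on `day`, following mlxup-yyyymmdd.log."""
    return log_dir / f"mlxup-{day:%Y%m%d}.log"


def latest_mlxup_log(log_dir: Path = LOG_DIR) -> Path | None:
    """Newest mlxup log by file name, or None if the directory or logs are missing."""
    if not log_dir.is_dir():
        return None
    logs = sorted(log_dir.glob("mlxup-*.log"))
    return logs[-1] if logs else None
```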

-

Page 108: Monitoring


Page 109: Specifications

Specifications MCX651105A-EDAT Specifications Please make sure to install the ConnectX-6 card in a PCIe slot that is capable of supplying the required power and airflow as stated in the table below. Adapter Card Size: 6.6 in. x 2.71 in. (167.65mm x 68.90mm) - Page 110 RoHS: RoHS Compliant Notes: a. The ConnectX-6 adapters supplement the IBTA auto-negotiation specification to get better bit error rates and longer cable reaches. This supplemental feature only initiates when connected to another NVIDIA InfiniBand product. b. Typical power for ATIS traffic load. The non-operational storage temperature specifications apply to the product without its package.

-

Page 111: MCX653105A-HDAT Specifications

MCX653105A-HDAT Specifications Please make sure to install the ConnectX-6 card in a PCIe slot that is capable of supplying the required power and airflow as stated in the table below. Adapter Card Size: 6.6 in. x 2.71 in. (167.65mm x 68.90mm) Physical Connector: Single QSFP56 InfiniBand and Ethernet (copper and optical) InfiniBand: IBTA v1.4... - Page 112 Cable Type Heatsink to Port Port to Heatsink Airflow (LFM) / Ambient Temperature Passive Cables 350 LFM / 55°C 250 LFM / 35°C NVIDIA Active 4.7W Cables 250 LFM / 35°C 500 LFM / 55°C Temperature Operational 0°C to 55°C Environmental Non-operational -40°C to 70°C...

-

Page 113: MCX653106A-HDAT Specifications

Notes: a. The ConnectX-6 adapters supplement the IBTA auto-negotiation specification to get better bit error rates and longer cable reaches. This supplemental feature only initiates when connected to another NVIDIA InfiniBand product. b. Typical power for ATIS traffic load. c. For engineering samples - add 250 LFM. The non-operational storage temperature specifications apply to the product without its package. - Page 114 Heatsink to Port Port to Heatsink Airflow (LFM) / Ambient Temperature Passive Cables 400 LFM / 55°C 300 LFM / 35°C NVIDIA Active 4.7W Cables 950 LFM / 55°C 300 LFM / 35°C 600 LFM / 48°C(d) Temperature Operational 0°C to 55°C...

-

Page 115: MCX653105A-HDAL Specifications

Notes: a. The ConnectX-6 adapters supplement the IBTA auto-negotiation specification to get better bit error rates and longer cable reaches. This supplemental feature only initiates when connected to another NVIDIA InfiniBand product. b. Typical power for ATIS traffic load. c. For both operational and non-operational states. - Page 116 RoHS: RoHS Compliant Notes: a. The ConnectX-6 adapters supplement the IBTA auto-negotiation specification to get better bit error rates and longer cable reaches. This supplemental feature only initiates when connected to another NVIDIA InfiniBand product. b. Typical power for ATIS traffic load. c. For both operational and non-operational states.

-

Page 117: MCX653106A-HDAL Specifications

MCX653106A-HDAL Specifications Please make sure to install the ConnectX-6 card in a liquid-cooled Intel® Server System D50TNP platform. Adapter Card Size: 6.6 in. x 2.71 in. (167.65mm x 68.90mm) Physical Connector: Dual QSFP56 InfiniBand and Ethernet (copper and optical) InfiniBand: IBTA v1.4 Protocol Auto-Negotiation: 1X/2X/4X SDR (2.5Gb/s per lane), DDR (5Gb/s per lane), QDR (10Gb/s per lane), FDR10 (10.3125Gb/s per lane), FDR (14.0625Gb/s per lane), EDR (25Gb/s Support... - Page 118 Please refer to ConnectX-6 VPI Power Specifications (requires NVOnline login credentials) Maximum power available through QSFP56 port: 5W Airflow (LFM) / Ambient Temperature by Airflow Direction (Heatsink to Port, Port to Heatsink) and Cable Type (Passive Cables, NVIDIA Active 4.7W Cables) Temperature Operational 0°C to 55°C Environmental Non-operational -40°C to 70°C Humidity Operational 10% to 85% relative humidity ...
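The auto-negotiation list gives per-lane rates; the aggregate rate of a link is the per-lane rate multiplied by the negotiated width (1X, 2X or 4X). The worked example below uses only the rates listed above. These are raw signaling rates, so the usable data rate is somewhat lower once line encoding is accounted for.

```python
# Per-lane signaling rates in Gb/s, copied from the auto-negotiation list above.
LANE_RATE_GBPS = {
    "SDR": 2.5,
    "DDR": 5.0,
    "QDR": 10.0,
    "FDR10": 10.3125,
    "FDR": 14.0625,
    "EDR": 25.0,
}
SUPPORTED_WIDTHS = (1, 2, 4)  # 1X/2X/4X


def link_rate_gbps(speed: str, width: int) -> float:
    """Aggregate signaling rate of a link: per-lane rate x number of lanes."""
    if width not in SUPPORTED_WIDTHS:
        raise ValueError(f"width must be one of {SUPPORTED_WIDTHS}, got {width}")
    return LANE_RATE_GBPS[speed] * width


for speed in LANE_RATE_GBPS:
    print(f"4X {speed}: {link_rate_gbps(speed, 4)} Gb/s")
```

For example, 4X EDR gives 4 x 25 = 100 Gb/s and 4X FDR gives 4 x 14.0625 = 56.25 Gb/s.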

-

Page 119: MCX653105A-ECAT Specifications

Notes: a. The ConnectX-6 adapters supplement the IBTA auto-negotiation specification to get better bit error rates and longer cable reaches. This supplemental feature only initiates when connected to another NVIDIA InfiniBand product. b. Typical power for ATIS traffic load. c. For both operational and non-operational states. - Page 120 Cable Type Heatsink to Port Port to Heatsink Airflow (LFM) / Ambient Temperature Passive Cables 300 LFM / 55°C 200 LFM / 35°C NVIDIA Active 2.7W Cables 300 LFM / 55°C 200 LFM / 35°C Temperature Operational 0°C to 55°C Environmental Non-operational -40°C to 70°C...

-

Page 121: MCX653106A-ECAT Specifications

Notes: a. The ConnectX-6 adapters supplement the IBTA auto-negotiation specification to get better bit error rates and longer cable reaches. This supplemental feature only initiates when connected to another NVIDIA InfiniBand product. b. Typical power for ATIS traffic load. The non-operational storage temperature specifications apply to the product without its package. - Page 122 Cable Type Heatsink to Port Port to Heatsink Airflow (LFM) / Ambient Temperature Passive Cables 350 LFM / 55°C 250 LFM / 35°C NVIDIA Active 2.7W Cables 550 LFM / 55°C 250 LFM / 35°C Temperature Operational 0°C to 55°C Environmental Non-operational -40°C to 70°C...
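The airflow tables state the minimum airflow a slot must supply for each cable type and airflow direction. The sketch below encodes the Page 122 table under two assumptions that the flattened text does not confirm: that the first value in each row belongs to the "Heatsink to Port" column, and that a requirement stated at a given ambient temperature also holds at lower ambients. Cable and direction keys are hypothetical labels.

```python
# Page 122 airflow table: (required airflow in LFM, ambient temperature in °C).
# Assumption: first value per row = "Heatsink to Port", second = "Port to Heatsink".
AIRFLOW = {
    ("passive", "heatsink_to_port"): (350, 55),
    ("passive", "port_to_heatsink"): (250, 35),
    ("active_2.7w", "heatsink_to_port"): (550, 55),
    ("active_2.7w", "port_to_heatsink"): (250, 35),
}
MAX_OPERATIONAL_C = 55  # "Operational 0°C to 55°C"


def airflow_sufficient(cable: str, direction: str, supplied_lfm: float, ambient_c: float) -> bool:
    """True if the supplied airflow meets the table's requirement for this ambient."""
    if not 0 <= ambient_c <= MAX_OPERATIONAL_C:
        raise ValueError("ambient temperature outside the operational range")
    required_lfm, rated_ambient_c = AIRFLOW[(cable, direction)]
    if ambient_c > rated_ambient_c:
        raise ValueError(f"the table gives no figure for {ambient_c}°C in this direction")
    return supplied_lfm >= required_lfm
```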

-

Page 123: MCX654105A-HCAT Specifications

Notes: a. The ConnectX-6 adapters supplement the IBTA auto-negotiation specification to get better bit error rates and longer cable reaches. This supplemental feature only initiates when connected to another NVIDIA InfiniBand product. b. Typical power for ATIS traffic load. The non-operational storage temperature specifications apply to the product without its package. - Page 124 Cable Type Heatsink to Port Port to Heatsink Airflow (LFM) / Ambient Temperature Passive Cables 600 LFM / 55°C 350 LFM / 35°C NVIDIA Active 4.7W Cables 350 LFM / 35°C 600 LFM / 55°C Temperature Operational 0°C to 55°C Environmental Non-operational -40°C to 70°C...

-

Page 125: MCX654106A-HCAT Specifications

RoHS: RoHS Compliant Notes: a. The ConnectX-6 adapters supplement the IBTA auto-negotiation specification to get better bit error rates and longer cable reaches. This supplemental feature only initiates when connected to another NVIDIA InfiniBand product. b. Typical power for ATIS traffic load. c. For engineering samples - add 250 LFM. The non-operational storage temperature specifications apply to the product without its package. - Page 126 Ethernet: 200GBASE-CR4, 200GBASE-KR4, 200GBASE-SR4, 100GBASE-CR4, 100GBASE-CR2, 100GBASE-KR4, 100GBASE-SR4, 50GBASE-R2, 50GBASE-R4, 40GBASE-CR4, 40GBASE-KR4, 40GBASE-SR4, 40GBASE-LR4, 40GBASE-ER4, 40GBASE-R2, 25GBASE-R, 20GBASE-KR2, 10GBASE-LR, 10GBASE-ER, 10GBASE-CX4, 10GBASE-CR, 10GBASE-KR, SGMII, 1000BASE-CX, 1000BASE-KX, 10GBASE-SR Data Rate InfiniBand SDR/DDR/QDR/FDR/EDR/HDR100/HDR Ethernet 1/10/25/40/50/100/200 Gb/s Gen3: SERDES @ 8.0GT/s, x16 lanes (2.0 and 1.1 compatible) Voltage: 12V, 3.3VAUX Adapter Card Power Power...

- Page 127 RoHS: RoHS Compliant Notes: a. The ConnectX-6 adapters supplement the IBTA auto-negotiation specification to get better bit error rates and longer cable reaches. This supplemental feature only initiates when connected to another NVIDIA InfiniBand product. b. Typical power for ATIS traffic load. The non-operational storage temperature specifications apply to the product without its package.
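The "Gen3: SERDES @ 8.0GT/s, x16 lanes (2.0 and 1.1 compatible)" line can be turned into a raw per-direction bandwidth figure: transfer rate x lanes x encoding efficiency. PCIe Gen3 uses 128b/130b encoding, while Gen2 (5.0 GT/s) and Gen1.1 (2.5 GT/s) use 8b/10b. The result is a ceiling before PCIe packet (TLP/DLLP) overhead, so achievable throughput is lower.

```python
# Raw PCIe bandwidth per direction = GT/s x lanes x (payload bits / line bits).
ENCODING = {"gen3": (128, 130), "gen2": (8, 10), "gen1.1": (8, 10)}
RATE_GT_S = {"gen3": 8.0, "gen2": 5.0, "gen1.1": 2.5}


def pcie_raw_gbps(gen: str, lanes: int) -> float:
    """Line-rate ceiling in Gb/s for one direction of a PCIe link."""
    payload_bits, line_bits = ENCODING[gen]
    return RATE_GT_S[gen] * lanes * payload_bits / line_bits


for gen in RATE_GT_S:
    print(f"{gen} x16: {pcie_raw_gbps(gen, 16):.2f} Gb/s per direction")
```

For the Gen3 x16 slot this gives 8.0 x 16 x 128/130 ≈ 126.03 Gb/s per direction.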

-

Page 128: MCX654106A-ECAT Specifications

MCX654106A-ECAT Specifications Please make sure to install the ConnectX-6 card in a PCIe slot that is capable of supplying the required power and airflow as stated in the table below. Adapter Card Size: 6.6 in. x 2.71 in. (167.65mm x 68.90mm) Physical Auxiliary PCIe Connection Card Size: 5.09 in. - Page 129 EMC: CE / FCC / VCCI / ICES / RCM / KC RoHS: RoHS Compliant Notes: a. The ConnectX-6 adapters supplement the IBTA auto-negotiation specification to get better bit error rates and longer cable reaches. This supplemental feature only initiates when connected to another NVIDIA InfiniBand product.

-

Page 130: MCX653105A-EFAT Specifications

b. Typical power for ATIS traffic load. The non-operational storage temperature specifications apply to the product without its package. MCX653105A-EFAT Specifications Please make sure to install the ConnectX-6 card in a PCIe slot that is capable of supplying the required power and airflow as stated in the table below. - Page 131 RoHS: RoHS Compliant Notes: a. The ConnectX-6 adapters supplement the IBTA auto-negotiation specification to get better bit error rates and longer cable reaches. This supplemental feature only initiates when connected to another NVIDIA InfiniBand product. b. Typical power for ATIS traffic load. The non-operational storage temperature specifications apply to the product without its package.

-

Page 132: MCX653106A-EFAT Specifications

MCX653106A-EFAT Specifications Please make sure to install the ConnectX-6 card in a PCIe slot that is capable of supplying the required power and airflow as stated in the table below. For power specifications when using a single-port configuration, please refer to MCX653105A-EFAT Specifications. - Page 133 Cable Type Heatsink to Port Port to Heatsink Airflow (LFM) / Ambient Temperature Passive Cables 350 LFM / 55°C 250 LFM / 35°C NVIDIA Active 2.75W Cables 550 LFM / 55°C 250 LFM / 35°C Temperature Operational 0°C to 55°C Environmental Non-operational -40°C to 70°C...

-

Page 134: MCX683105AN-HDAT Specifications

RoHS: RoHS Compliant Notes: a. The ConnectX-6 adapters supplement the IBTA auto-negotiation specification to get better bit error rates and longer cable reaches. This supplemental feature only initiates when connected to another NVIDIA InfiniBand product. b. Typical power for ATIS traffic load. - Page 135 RoHS: RoHS Compliant Notes: a. The ConnectX-6 adapters supplement the IBTA auto-negotiation specification to get better bit error rates and longer cable reaches. This supplemental feature only initiates when connected to another NVIDIA InfiniBand product. b. Typical power for ATIS traffic load.

-

Page 136: Adapter Card and Bracket Mechanical Drawings and Dimensions

Adapter Card and Bracket Mechanical Drawings and Dimensions All dimensions are in millimeters. The PCB mechanical tolerance is +/- 0.13mm. Adapter Cards ConnectX-6 PCIe x16 Dual-port Adapter Card ConnectX-6 PCIe x8 Dual-port Adapter Card ConnectX-6 PCIe x16 Single-port Adapter Card ConnectX-6 PCIe x8 Single-port Adapter Card ... - Page 137 MCX653105A-HDAL Auxiliary PCIe Connection Card ...

-

Page 138: Brackets Dimensions

Brackets Dimensions Dual-port Adapter Tall Bracket Dual-port Adapter Short Bracket... - Page 139 Single-port Adapter Tall Bracket Single-port Adapter Short Bracket...

-

Page 140: PCI Express Pinouts Description for Single-Slot Socket Direct Card

PCI Express Pinouts Description for Single-Slot Socket Direct Card This section applies to ConnectX-6 single-slot cards (MCX653105A-EFAT and MCX653106A-EFAT). ConnectX-6 single-slot Socket Direct cards offer improved performance to dual-socket servers by enabling direct access from each CPU in a dual-socket server to the network through its dedicated PCIe interface.

Page 141: Finding the GUID/MAC on the Adapter Card

Finding the GUID/MAC on the Adapter Card Each NVIDIA adapter card has unique identifiers printed on its label: the serial number, the card MAC for the Ethernet protocol, and the card GUID for the InfiniBand protocol. VPI cards have both a GUID and a MAC (derived from the GUID). ...
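The manual says only that a VPI card's MAC is derived from its GUID. A convention commonly seen on NVIDIA (Mellanox) cards, assumed here and not stated in this manual, is that the 48-bit MAC equals the 64-bit GUID with its two middle bytes (often 03:00) removed. The sketch applies that assumption; compare its result with the MAC printed on the label before relying on it. The GUIDs in the checks are made-up examples.

```python
def mac_from_guid(guid: str) -> str:
    """Derive a MAC from a 64-bit GUID by dropping bytes 3-4 (assumed convention)."""
    digits = guid.strip().lower().replace(":", "")
    if digits.startswith("0x"):
        digits = digits[2:]
    if len(digits) != 16 or any(c not in "0123456789abcdef" for c in digits):
        raise ValueError(f"expected a 64-bit GUID, got {guid!r}")
    raw = bytes.fromhex(digits)
    mac = raw[:3] + raw[5:]  # keep bytes 0-2 and 5-7
    return ":".join(f"{b:02x}" for b in mac)
```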

Page 143: Document Revision History

Dec. 2020: Updated installation instructions.
Dec. 2020: Added MCX653105A-HDAL and MCX653106A-HDAL support across the document.
Mar. 2020: Added MCX651105A-EDAT support across the document.
Sep. 2019: Added a note to the hardware installation instructions.
Aug. 2019: Updated "Package Contents" and "Hardware Installation".
Aug. 2019: Updated "PCI Express Pinouts Description".
- Page 144 (Date: Comments/Changes)
Jun. 2019: • Added MCX653105A-HDAT and MCX654105A-HCAT to the UM. • Updated "LED Interfaces".
Jun. 2019: • Added a note to "Windows Driver Installation". • Added short and tall brackets dimensions.
May 2019: • Added mechanical drawings to "Specifications". •...

- Page 145 NVIDIA product and may result in additional or different conditions and/or requirements beyond those contained in this document. NVIDIA accepts no liability related to any default, damage, costs, or problem which may be based on or attributable to: (i) the use of the NVIDIA product in any manner that is contrary to this document or (ii) customer product designs.

- Page 146 INDIRECT, SPECIAL, INCIDENTAL, PUNITIVE, OR CONSEQUENTIAL DAMAGES, HOWEVER CAUSED AND REGARDLESS OF THE THEORY OF LIABILITY, ARISING OUT OF ANY USE OF THIS DOCUMENT, EVEN IF NVIDIA HAS BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES. Notwithstanding any damages that customer might incur for any reason whatsoever, NVIDIA’s aggregate and cumulative liability towards customer for the products described herein shall be limited in...
